Generative AI in Patent Practice: What We Learned in the 🖥️ IP Business Talks Live Session with Sebastian Goebel

Generative AI is no longer a curiosity in IP. It is a working tool that is reshaping how patent professionals read, reason, and write. In our recent 🖥️ IP Business Talks live session with Sebastian Goebel, patent attorney, long-time AI practitioner, and IP Subject Matter Expert at the IP Business Academy, we cut through the hype and focused on what actually works today, what does not work (yet), and what this means for the broader role of IP experts. The central message was simple but powerful: AI does not write your patent, but it can significantly strengthen how you draft, prosecute, and manage quality, provided you redesign your workflow around it.

The recording of the live talk is available on LinkedIn.

Sebastian recently led a seminar on generative AI for patent attorneys at the German Association of Patent Attorneys, attended by more than 70 participants, and we talked about that event and what he learned from it.

From hype to workflow

One of the most common misconceptions we discussed is the idea that a model can “do the drafting.” The practical reality is more nuanced: large language models (LLMs) are augmenters that accelerate analysis, structuring, and iteration, but they depend on your expertise, your prompts, and your evidence base. In other words, they assist the professional; they don’t replace the profession. That distinction matters for quality, for ethics, and for client trust. Sebastian has worked intensively on the use of AI in patent processes for many years; his entry on Streamlining Patent Drafting can be found in the digital IP Lexicon 🧭 dIPlex.

Where AI helps today—concretely

Goebel emphasized use cases that immediately reduce friction without compromising professional standards:

- Triage and comprehension: semantic search across prior-art sets and office records; “ask-your-data” question-answering over firm repositories; rapid extraction of claim features and argument anchors. These steps turn hours of skimming into guided reading.

- Prosecution drafting support: first passes on office action responses, structured argument trees, or claim-chart scaffolds that a human then tests, tightens, and validates against the file history. The “low-hanging fruit,” Goebel noted, is often here rather than in greenfield drafting.

- Consistency & quality control: harmonizing terminology, spotting logical gaps between claim language and embodiments, or flagging specification contradictions — tasks where pattern-matching at scale is genuinely helpful.

The lesson: start where the legal and technical uncertainty is lower, let AI compress the repetitive analysis, and reinvest the saved time in strategic judgment.
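To make the consistency and quality-control use case more concrete, here is a minimal, deliberately non-AI sketch of one such check: it flags words used in the claims that never appear in the description, a crude proxy for missing support. It assumes claims and description are available as plain strings; the function names, the stopword list, and the word-level matching are hypothetical illustrations, not a description of Goebel’s tooling. An LLM is useful precisely because it can go beyond this kind of literal matching, for example by recognizing synonyms.

```python
import re

# Hypothetical list of boilerplate words to ignore when comparing vocabularies.
STOPWORDS = {"a", "an", "the", "of", "and", "or", "to", "in", "on", "for",
             "with", "said", "wherein", "comprising", "claim", "is", "are",
             "at", "least", "one"}

def terms(text: str) -> set[str]:
    """Lower-case words of three or more letters, minus boilerplate."""
    return {w for w in re.findall(r"[a-z]{3,}", text.lower()) if w not in STOPWORDS}

def unsupported_claim_terms(claims: str, description: str) -> list[str]:
    """Return claim words that never occur in the description, sorted."""
    return sorted(terms(claims) - terms(description))

claims = "1. A sensor housing comprising a flexible gasket and a latch."
description = "The housing has a rubber gasket. A clip secures the lid."
print(unsupported_claim_terms(claims, description))
# → ['flexible', 'latch', 'sensor']
```

The output is a review list for a human, not a verdict: “latch” vs. “clip” may be a genuine support gap or simply a terminology inconsistency worth harmonizing.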

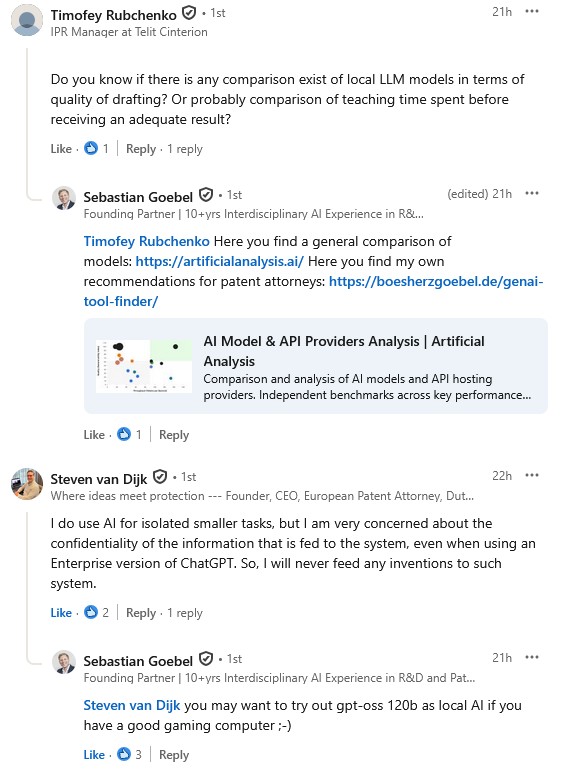

Confidentiality, regulation, and the on-prem option

For European practitioners, especially in Germany, professional rules and confidentiality obligations make unrestricted cloud use challenging for drafting scenarios. Many firms therefore evaluate local (on-prem) LLMs that keep data inside their own perimeter. Goebel’s firm has run local systems for years with positive results, although doing so requires the IT maturity to operate and update them. He also pointed to rapidly improving compact AI hardware that makes private in-office inference increasingly practical, lowering the barrier to adoption for boutiques and larger practices alike.

This is not a “cloud vs. local” ideology; it’s about matching risk, capability, and use case. For low-risk document tasks and internal knowledge querying, managed cloud can be acceptable under the right terms; for client-sensitive drafting, on-prem often wins. The governance model — not the brand name — decides.
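One way to make “the governance model decides” operational is to write the routing rule down explicitly, so it can be reviewed like any other policy. The sketch below assumes three hypothetical sensitivity tiers and a simple yes/no flag for whether contractual and technical cloud safeguards are in place; the tier names and the strict rule that client-confidential work always stays local are illustrative assumptions, not a recommendation made in the session.

```python
from enum import Enum

class Sensitivity(Enum):
    PUBLIC = 1               # e.g. published prior art, general knowledge queries
    INTERNAL = 2             # firm documents without client identifiers
    CLIENT_CONFIDENTIAL = 3  # unpublished drafts, client identifiers

class Target(Enum):
    LOCAL = "on-prem"
    CLOUD = "managed cloud"

def route(sensitivity: Sensitivity, cloud_safeguards_in_place: bool) -> Target:
    """Pick a deployment target for a task under a hypothetical firm policy.

    Client-confidential work always stays local; lower tiers may go to the
    cloud only when contractual and technical safeguards are in place.
    """
    if sensitivity is Sensitivity.CLIENT_CONFIDENTIAL:
        return Target.LOCAL
    return Target.CLOUD if cloud_safeguards_in_place else Target.LOCAL

print(route(Sensitivity.CLIENT_CONFIDENTIAL, True).value)  # → on-prem
print(route(Sensitivity.PUBLIC, True).value)               # → managed cloud
print(route(Sensitivity.INTERNAL, False).value)            # → on-prem
```

Keeping the rule in a small, versioned function (or an equivalent configuration file) makes the decision auditable and easy to tighten when professional rules or vendor terms change.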

The productivity paradox and the format shift

Why do some teams feel slower after introducing AI? Because early phases demand investment in process change: digitizing repositories, standardizing naming, writing playbooks, and learning prompt and review patterns. The conversation drew a useful analogy to the early PC era, when firms invested for years before aggregate productivity visibly moved. The same holds for today’s LLMs: benefits follow once the workflow itself is reinvented, not when we force new tools into old formats. Think of the shift from “reading a newspaper on a screen” to native digital news formats — value appears after the format changes.

The live talk also sparked an intense discussion on LinkedIn, both during and after the session.

Model landscape and economics: open, closed, and competitive tension

Beyond tools, we touched on market dynamics. Open-source model families continue to pressure closed platforms on cost-performance, giving firms credible private, fine-tunable baselines for on-prem use. In parallel, hyperscalers iterate quickly in the cloud, focusing on breadth of integrations. Goebel’s point wasn’t to predict winners; it was to remind us that choice is expanding, and that strategic interests of vendors (and their investors) will keep the ecosystem competitive. For users, that competition is a feature, not a bug.

What AI cannot do (and why that’s reassuring)

If LLMs sound convincing, it’s because they produce plausible language, not because they reason like humans. Plausibility is not truth; coherence is not validity. Models reflect their training distributions and can surface bias or hallucinate structure where none exists. That’s precisely why the patent attorney’s role does not shrink — it shifts toward orchestration, verification, and judgment. Skilled practitioners design prompts, constrain context, set review gates, and own the final legal and technical conclusions.

From tools to transformation: what this means for IP experts

Here’s the deeper implication. Generative AI forces three simultaneous moves for IP experts:

- Method redesign: Break large, ambiguous tasks (e.g., “draft the response”) into narrow, checkable sub-tasks (extract features, align with prior art distinctions, propose claim amendments, test enablement language). LLMs excel at sub-tasks when you supply structure and evidence.

- Knowledge engineering: Curate private corpora (office actions, successful arguments, style guides, domain-specific glossaries) and wire them to retrieval pipelines. Your model is only as good as the institutional memory you feed it.

- Governance first: Decide what stays local, what can be cloud, and what never leaves a secure enclave. Document decisions, log usage, and review outputs. Treat prompts and system configurations as compliance artifacts, not just tech toys.
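The knowledge-engineering step can be illustrated with a deliberately simple retrieval sketch: rank passages from a private corpus by word overlap with a query, then assemble a prompt that carries each passage’s source ID so the model’s answer can cite it. Production pipelines typically use embedding-based search instead of word overlap; the `Passage` type, the scoring, the prompt wording, and the document IDs below are hypothetical stand-ins.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Passage:
    source_id: str  # hypothetical internal reference, e.g. an office action response
    text: str

def tokens(text: str) -> set[str]:
    """Lower-case alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    """Return up to k passages sharing the most words with the query.

    Passages with no overlap are dropped; ties keep corpus order.
    """
    q = tokens(query)
    scored = [(len(q & tokens(p.text)), i, p) for i, p in enumerate(corpus)]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [p for _, _, p in scored[:k]]

def build_prompt(query: str, passages: list[Passage]) -> str:
    """Assemble a grounded prompt that asks the model to cite source IDs."""
    context = "\n".join(f"[{p.source_id}] {p.text}" for p in passages)
    return ("Answer using only the sources below and cite their IDs.\n"
            f"{context}\n\nQuestion: {query}")

corpus = [
    Passage("OA-2021-07", "Inventive step argued via the unexpected damping effect of the gasket."),
    Passage("STYLE-01", "Use 'comprising' rather than 'including' in independent claims."),
    Passage("OA-2022-03", "Clarity objection overcome by defining the gasket material."),
]
hits = retrieve("gasket inventive step argument", corpus)
print([p.source_id for p in hits])  # → ['OA-2021-07', 'OA-2022-03']
```

The prompt built from `hits` contains only vetted firm material, which is the point of “institutional memory”: the quality of the answer is bounded by what was curated into `corpus`.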

For in-house IP and outside counsel alike, this also reframes collaboration. Business units will expect faster cycles and clearer rationales; AI can help you explain trade-offs (scope vs. support, breadth vs. risk) with evidence pulled straight from your corpus. The more you codify that reasoning, the more teachable your practice becomes — for humans and machines.

Practical operating model (that actually works)

If you’re building an AI-enabled patent workflow, anchor it in these principles:

- Data hygiene before model magic: Organize prosecution files, normalize metadata, and maintain versioned templates. Garbage in, garbage out — just faster.

- Private retrieval over public recall: Use retrieval-augmented generation (RAG) from your controlled repositories. The model should cite your sources, not invent them.

- Task-specific chains: Create short, reusable prompt chains for repeatable steps (e.g., “extract distinguishing features,” “generate § 112 support sentences,” “map claim elements to embodiments”). Keep each chain auditable.

- Structured human review: Require a named reviewer, checklist, and sign-off for each AI-assisted output. The point is not to “trust the model”; it’s to trust the process.

- Local first for sensitive work: Default to on-prem for drafts and client identifiers; consider cloud only with explicit contractual and technical safeguards.

- Measure what matters: Track cycle time, defect types, and downstream prosecution outcomes — not just “tokens used.” Value shows up in speed-to-quality, not novelty demos.
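The “task-specific chains” and “structured human review” principles can be combined in a small record type: each AI-assisted step logs its prompt (with a hash for later verification), its output, and a timestamp, and nothing counts as final until a named reviewer has ticked every checklist item. In this sketch, `call_model` is a placeholder for whatever local or cloud model client a firm actually uses, and the field names and checklist items are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional

@dataclass
class StepRecord:
    step: str
    prompt: str
    output: str
    prompt_sha256: str
    created: datetime
    reviewer: Optional[str] = None
    checklist: dict[str, bool] = field(default_factory=dict)

    @property
    def approved(self) -> bool:
        """Final only if a named reviewer ticked every checklist item."""
        return bool(self.reviewer) and bool(self.checklist) and all(self.checklist.values())

def run_step(step: str, prompt: str, call_model: Callable[[str], str]) -> StepRecord:
    """Run one chain step and keep an auditable record of input and output."""
    output = call_model(prompt)
    digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    return StepRecord(step, prompt, output, digest, datetime.now(timezone.utc))

def sign_off(record: StepRecord, reviewer: str, checklist: dict[str, bool]) -> StepRecord:
    """Attach the reviewer's name and completed checklist to the record."""
    record.reviewer = reviewer
    record.checklist = dict(checklist)
    return record

# Stand-in for a real model client, used only to exercise the workflow.
fake_model = lambda p: "Distinguishing feature: flexible gasket."
rec = run_step("extract distinguishing features", "Compare claim 1 with document D1.", fake_model)
print(rec.approved)  # → False
sign_off(rec, "A. Reviewer", {"checked against file history": True, "sources cited": True})
print(rec.approved)  # → True
```

Because each record carries a timestamp, the same structure can also feed the cycle-time and defect metrics mentioned above.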

A sober view on the “AI bubble”

We also touched on market exuberance. Yes, there is speculative heat in AI investment, but practitioners shouldn’t confuse capital cycles with practical utility. For patent work, the opportunity is still underpriced in one respect: firms that master corpus curation, private inference, and disciplined review can produce more consistent quality at a pace that conventional teams cannot match. The moat is not the model; it’s the operating discipline wrapped around it.

Central thought: craft, accelerated

The craft of patent practice — problem framing, claim strategy, enablement, and argument — remains human. Generative AI, properly governed, amplifies that craft by compressing the steps between insight and expression. Our discussion with Sebastian Goebel reinforced that the winners won’t be the loudest adopters, but the quiet method builders: the teams who restructure work into evidence-backed, reviewable micro-tasks; who own their data; and who make every AI-assisted sentence traceable to real understanding. That is not automation of expertise — it is the systematic elevation of it.

Sebastian is an IP Subject Matter Expert on the IPBA Connect platform with the IP Business Academy

Sebastian uses the multichannel platform IPBA Connect for his topics, his law firm, and his business development. Through this collaboration, he aims to raise awareness of the digitization of IP work and to position himself as a pioneer in this area. A separate post about the collaboration is available here: Sebastian Goebel on IPBA Connect.