Synthetic Data: An Introduction with Real-World Impact

Imagine a world where companies can create highly realistic data, entirely from scratch, to solve real-world problems without ever exposing personal details.

That’s where synthetic data comes into play.

At its core, synthetic data is artificially generated information that mimics real-world data, allowing businesses and researchers to train machine learning models, run simulations, or conduct studies — without the need for sensitive or proprietary datasets. With advances in machine learning, synthetic data has become an essential tool for industries looking to innovate while addressing privacy concerns and legal regulations.

To put things in perspective, consider this: financial institutions spend millions ensuring the security of their customers’ sensitive data, like credit scores and transaction histories. With synthetic data, they can generate datasets that simulate those same conditions and interactions, but without using any real customer information. This drastically reduces the risk of a data breach or a regulatory violation.

Similarly, in healthcare, the need for patient privacy is paramount, but hospitals also need vast amounts of data to develop diagnostic algorithms. Synthetic data offers a solution. A report from Gartner estimates that by 2030, synthetic data will eclipse real data in AI models, making up to 60% of the input for healthcare-related AI projects.

Let’s break this down with numbers:

- A hospital with a dataset of 100,000 patient records can generate a synthetic version with all the same statistical properties, but with none of the actual patient information.

- The financial sector, which is expected to generate over $30 billion in value from AI by 2025, uses synthetic data to simulate billions of transactions, helping with fraud detection models.

Synthetic data is not just about privacy — it’s about scalability and flexibility. For instance, when Tesla’s self-driving cars undergo virtual training, they rely heavily on synthetic data to simulate driving conditions, accidents, and road situations without risking real lives.

There are three primary types of synthetic data, each generated using different methods, based on the use case and the level of complexity required.

Fully Synthetic Data: Data that is entirely generated from scratch using models that simulate real-world conditions.

Partially Synthetic Data: Combines real and synthetic data by replacing sensitive or incomplete portions of a dataset with generated data.

Hybrid Synthetic Data: Generated using real data as a foundation, but blended with synthetic data to create larger, more diverse datasets.

The generation methods that are used more frequently are:

Statistical Models: used for fully or partially synthetic data.

Generative Adversarial Networks (GANs): used for complex image or audio generation, especially in fully synthetic datasets.

Agent-based Models: used for simulating complex systems where interactions between agents result in the overall dataset.

The table below summarize the example of use and advantages for each type of synthetic data.

| Type of Synthetic Data |

Method of Generation | Example of Use Case | Advantages |

| Fully Synthetic | GANs, Statistical Models | Training AI on synthetic faces without using real individuals’ images. | Reduces risk of privacy breaches. |

| Partially Synthetic | Statistical Models | Healthcare records with replaced patient names and addresses. | Keeps real, useful data intact while protecting identities. |

| Hybrid Synthetic | GANs + Augmentation from Real Data | Simulating rare driving events for self-driving car tests. | Combines the realism of real data with expanded synthetic possibilities. |

| Time Series Synthetic | Recurrent Neural Networks (RNNs) | Generating synthetic financial market data for algorithm trading models. | Helps model long-term dependencies. |

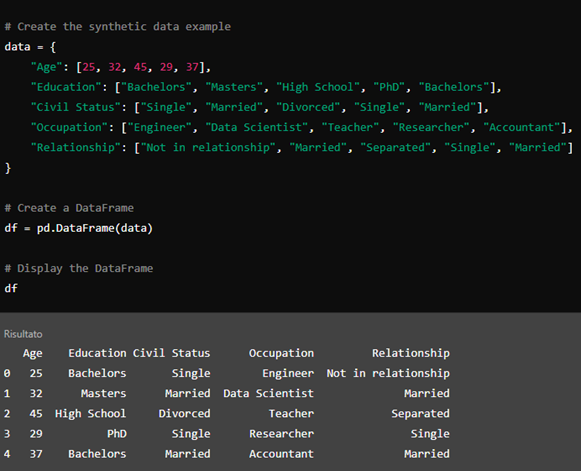

This is a small-scale example of synthetic data representing various demographics, designed to simulate real-world information without using actual personal data.

The synthetic dataset was created based on generating fictional values for each attribute, simulating realistic demographic data.

Synthetic data and IP

Synthetic data can play a significant role in addressing concerns related to intellectual property (IP) in AI and data-driven industries, for example:

1 . Avoiding IP Infringement

When companies develop AI models, they often rely on large datasets to train and improve the algorithms, which may include information that’s protected by different IP rights, and potentially infringing them. By generating synthetic data companies can sidestep these IP issues. For instance, if a proprietary dataset of customer purchasing behavior is used to train an AI, creating a synthetic version of that dataset helps reduce the risk of IP violation while still enabling the AI to learn from realistic patterns.

2 . Creating Proprietary Datasets

Companies can use synthetic data to create proprietary datasets that are distinct from existing protected ones. These new datasets can be used in machine learning without conflicting with other IP rights, giving businesses their own IP-protected assets. This is particularly valuable in industries like healthcare or finance, where datasets are often sensitive and may be protected by various legal frameworks.

3 . Freedom to Innovate

Companies can explore new markets or develop new products without being restricted by the IP constraints of existing datasets. They can create synthetic versions of datasets that capture unique scenarios, helping them expand into new areas of research or product development without infringing on existing patents or proprietary datasets.

Future Challenges

Despite its clear advantages, the use of synthetic data still presents several challenges. One key issue is ensuring the fidelity of synthetic data, meaning how closely it resembles the original data, particularly in capturing subtle nuances and correlations. Additionally, synthetic data’s ability to handle extreme or rare situations, which may be underrepresented in the original data, remains an area that requires further improvement.

As the technology evolves, the widespread adoption of synthetic data is expected to address not only legal issues surrounding intellectual property but also help foster an environment where AI can grow ethically and in full compliance with regulations. The development of more advanced techniques for creating high-fidelity, representative data will be crucial for these future advancements.

About the Author

Marco De Biase is a Qualified Italian Patent Attorney and partially qualified European Patent Attorney. He has a degree in physics from the Università degli Studi di L’Aquila and gained post-graduation experience at the Lamel Institute of CNR in Bologna. Since 2020 he is working as a Patent Attorney at De Tullio & Partners.

Marco De Biase is a Qualified Italian Patent Attorney and partially qualified European Patent Attorney. He has a degree in physics from the Università degli Studi di L’Aquila and gained post-graduation experience at the Lamel Institute of CNR in Bologna. Since 2020 he is working as a Patent Attorney at De Tullio & Partners.